
What Meta Robots are and what they are used for

Where and how is the meta robots tag implemented?

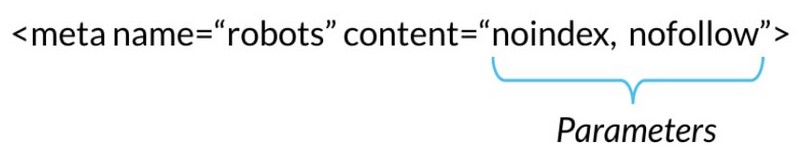

The meta robots tag must be placed in the `<head>` section of the page and must include two attributes, name and content, to work properly.

Attribute “name”

The name attribute indicates which crawlers the tag applies to: robots applies to all crawlers, while a specific name such as googlebot targets only that crawler.

Attribute “content”

The content attribute tells the crawler how to behave, using one or more of the parameters described below, separated by commas.

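Meta tag examples

```html
<!-- All crawlers -->
<meta name="robots" content="noindex" />
<!-- Google's main web crawler -->
<meta name="googlebot" content="noindex" />
<!-- Google News crawler -->
<meta name="googlebot-news" content="noindex" />
<!-- Yahoo! crawler -->
<meta name="slurp" content="noindex" />
<!-- Microsoft's older crawler (MSN Search, later Bing) -->
<meta name="msnbot" content="noindex" />
```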
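To show how the name and content attributes work together, here is a minimal sketch, using only Python's standard `html.parser` module, that collects the robots directives declared in a page's `<head>`. The set of crawler names is limited to the ones in the examples above and is an assumption, as are the hypothetical names `MetaRobotsParser` and `extract_meta_robots`; the sketch also assumes the page has an explicit `<head>` element and ignores meta tags placed elsewhere.

```python
from html.parser import HTMLParser

# Crawler names taken from the examples above; "robots" targets all crawlers.
# Other crawler names exist; this set is only illustrative.
CRAWLER_NAMES = {"robots", "googlebot", "googlebot-news", "slurp", "msnbot"}


class MetaRobotsParser(HTMLParser):
    """Hypothetical helper: collects <meta name=... content=...> directives in <head>."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.directives: dict[str, list[str]] = {}  # crawler name -> directives

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; self-closing tags
        # such as <meta ... /> are routed here by the default
        # handle_startendtag implementation.
        if tag == "head":
            self.in_head = True
        elif tag == "meta" and self.in_head:
            attributes = dict(attrs)
            name = (attributes.get("name") or "").strip().lower()
            if name in CRAWLER_NAMES:
                content = attributes.get("content") or ""
                # content may hold several comma-separated directives
                values = [v.strip().lower() for v in content.split(",") if v.strip()]
                self.directives.setdefault(name, []).extend(values)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def extract_meta_robots(html: str) -> dict[str, list[str]]:
    """Return {crawler name: [directives]} for robots meta tags in <head>."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = """<html><head>
<meta name="robots" content="noindex, follow" />
<meta name="googlebot-news" content="noindex" />
<meta name="description" content="Not a robots tag" />
</head><body>...</body></html>"""

print(extract_meta_robots(page))
# {'robots': ['noindex', 'follow'], 'googlebot-news': ['noindex']}
```

The description tag is skipped because its name is not a crawler name, and a robots tag placed in the `<body>` would be ignored, mirroring the placement rule described above.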

Indexing control parameters

- Noindex: tells search engines not to index the page.

- Index: tells search engines they may index the page. This is the default behavior, so it does not need to be declared.

- Follow: tells the bot to follow all the links it finds, even if the page itself is not indexed, passing link equity ("link juice") to the linked pages. This is also the default behavior.

- Nofollow: tells the crawler not to follow any link on the page and not to pass any page authority through those links.

- Noimageindex: tells the crawler not to index any images on the page.

- None: equivalent to using noindex and nofollow together.

- Noarchive: tells search engines not to display a cached version of the page.

- Nocache: the same as noarchive, but only used by Internet Explorer and Firefox.

- Nosnippet: tells search engines not to show a text snippet or video preview for the page in the search results.

- Unavailable_after: tells search engines to stop showing the page in search results after the specified date and time.
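The parameters above can be combined in a single content value, so a crawler has to resolve them into an effective behavior. The following Python sketch does that with a hypothetical `parse_robots_content` function. It assumes that when directives conflict the more restrictive one wins (which is how Google describes its handling), and it keeps the unavailable_after date as a raw string, assuming a comma-free format such as 2025-12-31.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class RobotsPolicy:
    index: bool = True   # pages are indexable unless told otherwise
    follow: bool = True  # links are followed unless told otherwise
    other: Set[str] = field(default_factory=set)  # e.g. noarchive, nosnippet
    unavailable_after: Optional[str] = None  # raw date string, not parsed


def parse_robots_content(content: str) -> RobotsPolicy:
    """Hypothetical helper: resolve a meta robots content value.

    Restrictive directives win regardless of order, so "index, noindex"
    still yields index=False.
    """
    policy = RobotsPolicy()
    for raw in content.split(","):
        token = raw.strip()
        if not token:
            continue
        key, sep, value = token.partition(":")
        key = key.strip().lower()
        if key == "unavailable_after" and sep:
            policy.unavailable_after = value.strip()
        elif key == "none":  # shorthand for noindex + nofollow
            policy.index = False
            policy.follow = False
        elif key == "noindex":
            policy.index = False
        elif key == "nofollow":
            policy.follow = False
        elif key in ("index", "follow"):
            continue  # explicit defaults change nothing
        else:
            policy.other.add(key)  # noarchive, nosnippet, noimageindex, ...
    return policy


for value in ("noindex, follow", "none", "noarchive, unavailable_after: 2025-12-31"):
    print(repr(value), "->", parse_robots_content(value))
```

Because index and follow are defaults, the sketch only ever switches them off; that is why declaring them explicitly has no effect.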

Guidelines for different search engines

Not all search engines support every directive:

| Value | Bing | Yandex | |
|---|---|---|---|
| index |  |  |  |
| noindex |  |  |  |
| none |  |  |  |
| noimageindex |  |  |  |
| follow |  |  |  |
| nofollow |  |  |  |
| noarchive/nocache |  |  |  |
| nosnippet |  |  |  |
| notranslate |  |  |  |
| unavailable_after |  |  |  |

Example of use and interpretation of directives


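The most common parameters, and the most useful for SEO, are index, noindex, follow and nofollow. Combined in the content attribute, they are interpreted as follows:

- index, follow: index the page and follow its links (the default behavior).

- noindex, follow: do not index the page, but follow its links.

- index, nofollow: index the page, but do not follow its links.

- noindex, nofollow: do not index the page or follow its links (equivalent to none).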

Types of Robots Tags

- Meta robots tags: part of the HTML page, placed in the `<head>` section.

- X-Robots-Tag: sent by the web server as an HTTP response header, so it can also be applied to non-HTML files such as PDFs and images.

Remark: It is not necessary to use both the meta robots tag and the X-Robots-Tag on the same page, as doing so is redundant.
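Because the X-Robots-Tag travels in the HTTP response rather than in the HTML, it is set in server code or configuration. Below is a minimal sketch of a Python WSGI middleware that adds the header for certain file types. The extension-to-directive mapping and the names `x_robots_value`, `add_x_robots_tag` and `demo_app` are hypothetical; a real site would more likely configure this in its web server.

```python
from typing import Callable, Iterable, Optional

# Hypothetical rules: file extension -> directives to send in X-Robots-Tag.
X_ROBOTS_RULES = {".pdf": "noindex", ".docx": "noindex, nofollow"}


def x_robots_value(path: str) -> Optional[str]:
    """Return the X-Robots-Tag value for a request path, or None."""
    lowered = path.lower()
    for extension, directives in X_ROBOTS_RULES.items():
        if lowered.endswith(extension):
            return directives
    return None


def add_x_robots_tag(app: Callable) -> Callable:
    """WSGI middleware: append an X-Robots-Tag header when a rule matches."""

    def wrapped(environ, start_response):
        directives = x_robots_value(environ.get("PATH_INFO", ""))

        def start_response_with_header(status, headers, exc_info=None):
            if directives:
                headers = list(headers) + [("X-Robots-Tag", directives)]
            return start_response(status, headers, exc_info)

        return app(environ, start_response_with_header)

    return wrapped


def demo_app(environ, start_response) -> Iterable[bytes]:
    """Stand-in application that serves the same body for every path."""
    start_response("200 OK", [("Content-Type", "application/octet-stream")])
    return [b"file contents"]


app = add_x_robots_tag(demo_app)

# To try it locally with the standard library's reference server:
# from wsgiref.simple_server import make_server
# make_server("127.0.0.1", 8000, app).serve_forever()
```

A request for /docs/report.pdf would then carry `X-Robots-Tag: noindex`, while HTML pages are left to their own meta robots tags.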

Why they are important

Meta robots directives, together with the robots.txt file, canonical tags and the X-Robots-Tag, allow you to control the indexability and crawlability of your website.

This lets us optimize the crawl budget and avoid duplicate content issues.

Differences between meta robots and robots.txt

The main difference is that meta robots give instructions about indexing pages, while robots.txt gives instructions about crawling them.
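Python's standard `urllib.robotparser` module illustrates the crawling side of this distinction: it only answers whether a crawler may fetch a URL and knows nothing about indexing. The robots.txt rules and URLs below are hypothetical, and the module's matching is simpler than that of major search engines (for example, it may not handle every wildcard pattern), so treat this as a sketch.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com: keep crawlers out of /admin/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/blog/post", "https://example.com/admin/login"):
    # can_fetch() answers a crawling question only; the robots.txt
    # standard has no directive that says whether a page may be indexed.
    print(url, "crawlable:", robots.can_fetch("Googlebot", url))
```

This prints `crawlable: True` for the blog post and `crawlable: False` for the admin login page. Whether a crawlable page is indexed is decided separately, by its meta robots tag or X-Robots-Tag header.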

SEO recommendations for Meta Robots application


Important: as with robots.txt files, crawlers are not obliged to follow the instructions in your page's meta tags; they act only as suggestions.

Frequently Asked Questions

What method should I use to block crawlers?

Depending on the case, there are three different ways to block Googlebot:

- Robots.txt: use it if crawling your content is causing problems on the server, or to keep crawlers out of sections of the site that have no value in search, such as the login page for your site's administration area. However, do not use robots.txt to block private content (use server-side authentication instead), to manage canonicalization, or to keep a page out of Google's index: a blocked URL can still be indexed if other pages link to it.

- Meta robots tag: use it if you need to control how an individual HTML page is displayed in search results.

- HTTP X-Robots-Tag header: use it if you need to control how content is displayed in search results when you cannot use meta robots tags (for example, for non-HTML files such as PDFs), or when you want to manage these directives from the server.

When to use Meta Robots instead of Robots.txt

Meta robots tags should be used whenever you want to control indexing at the individual page level. In particular, to ensure that a URL is not indexed, use the robots meta tag or the X-Robots-Tag HTTP header, and do not block that URL in robots.txt: crawlers must be able to fetch the page to see the directive.
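Because a crawler has to fetch a page before it can read a noindex directive, a noindex on a URL that robots.txt blocks has no effect. The following Python sketch checks both conditions for one URL. The helper names `has_noindex` and `noindex_is_effective` are hypothetical, the rules and URLs are examples, and the sketch assumes plain directive values (it does not handle user-agent-prefixed X-Robots-Tag values such as "googlebot: noindex").

```python
from typing import Iterable, Optional
from urllib.robotparser import RobotFileParser


def has_noindex(directives: Optional[str]) -> bool:
    """Hypothetical helper: True if a directive list contains noindex or none.

    Assumes plain values such as "noindex, follow"; user-agent-prefixed
    X-Robots-Tag values are not handled.
    """
    if not directives:
        return False
    tokens = {token.strip().lower() for token in directives.split(",")}
    return bool(tokens & {"noindex", "none"})


def noindex_is_effective(robots_lines: Iterable[str], url: str, user_agent: str,
                         meta_content: Optional[str] = None,
                         x_robots_header: Optional[str] = None) -> bool:
    """Hypothetical check: can the crawler fetch the URL and find a noindex?"""
    robots = RobotFileParser()
    robots.parse(robots_lines)
    if not robots.can_fetch(user_agent, url):
        # Blocked by robots.txt: the page is never fetched, so the
        # directive is never read and the URL may still be indexed via links.
        return False
    return has_noindex(meta_content) or has_noindex(x_robots_header)


rules = ["User-agent: *", "Disallow: /private/"]
print(noindex_is_effective(rules, "https://example.com/thanks", "Googlebot",
                           meta_content="noindex, follow"))   # True
print(noindex_is_effective(rules, "https://example.com/private/page", "Googlebot",
                           meta_content="noindex"))           # False
```

The second call returns False even though the page declares noindex, which is exactly the situation to avoid when you want a URL removed from search results.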

Links and recommended reading:

- Moz. (2022, June 2). What Are Robots Meta Tags? Learn Technical SEO. Moz. https://moz.com/learn/seo/robots-meta-directives

- Tutoríal + Guía de las etiquetas meta robots y SEO [Tutorial and guide to meta robots tags and SEO]. (2019, October 12). Seoandcopywriting.com. https://seoandcopywriting.com/meta-robots-seo-guia/

- Crear y enviar un archivo robots.txt [Create and submit a robots.txt file]. (n.d.). Google Developers. Retrieved January 5, 2023, from https://developers.google.com/search/docs/advanced/robots/create-robots-txt?hl=es