When we talk about technical SEO for e-commerce, one of the most powerful, and most often underestimated, strategies is controlling product variants. Colors, sizes, materials: each combination generates a different URL and, if these URLs are not managed properly, they can become a real black hole for the site’s crawl budget, link juice, and overall SEO efficiency.

In this article, we’ll explain how to move product page variants to a non-indexed subdomain, without <a href> links, and how, even in this context, you can use these same URLs in Google Shopping without harming your organic positioning.

The strategy looks simple but is technically demanding, and it can make a real difference to the success of your online store.

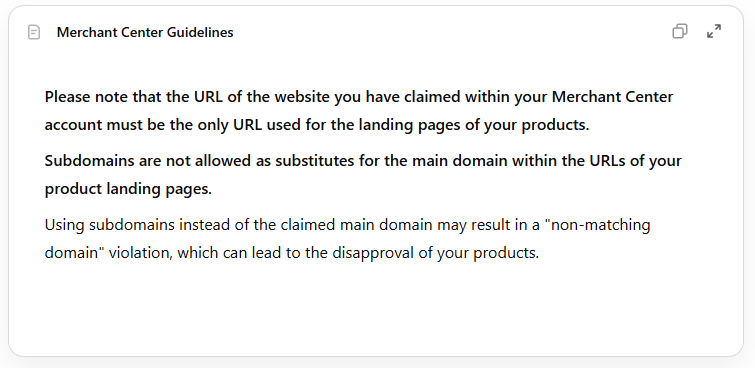

Disclaimer on using Subdomains in Google Merchant:

Read Google’s text in the following image carefully:

As you can see, when working with advanced SEO and Google Shopping strategies through Google Merchant Center, one of the most controversial points is the configuration of the destination URL for products. This can lead to product disapproval and negatively affect the store’s online performance.

The key requirement is that the domain of your product URLs must match the main domain registered in Merchant Center.

Therefore, Google Merchant Center requires that the website URL registered in the account be the only one used in the landing pages of the products. This means that you cannot use subdomains different from the main domain that you have verified in Merchant Center.

Using such a subdomain is exactly what we’re proposing.

So why are we offering this solution?

Simple: using the subdomain means that Google could flag a “non-matching domain” violation, which would result in the disapproval of your products, but this doesn’t always happen.

This practice should therefore only be used if you accept that risk.

Although it has worked in our tests, it is a borderline technique that may no longer be valid if Google updates its policies. Don’t use it on accounts where losing approval could have a big impact.

The problem: poorly managed variants in SEO

Imagine you have a product with 5 sizes and 10 colors.

That gives you 50 different URLs for a single product. In many e-commerce sites, these variants:

- Are linked from the main product page with <a href>.

- Are automatically generated with almost identical content.

- Are included in the sitemap.

And Google tries to crawl and index them as if they were key pages.

The result:

- Google wastes time crawling duplicate content or content without SEO value.

- Link juice is diluted among pages with no search intent.

- The positioning of the main product page is cannibalized.
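To make the scale concrete, the 5 sizes × 10 colors example can be sketched in a few lines of JavaScript. The option names, the base URL, and the `size`/`color` query parameters are hypothetical; only the counts come from the example above.

```javascript
// Hypothetical option lists: only the counts (5 sizes, 10 colors) come from the article.
const sizes = ["XS", "S", "M", "L", "XL"];
const colors = [
  "red", "blue", "green", "black", "white",
  "grey", "navy", "pink", "beige", "brown",
];

// Build one URL per size/color combination for a single product.
// The query-parameter scheme is an assumption; many stores use path segments instead.
function variantUrls(baseUrl, sizeOptions, colorOptions) {
  const result = [];
  for (const size of sizeOptions) {
    for (const color of colorOptions) {
      const url = new URL(baseUrl);
      url.searchParams.set("size", size);
      url.searchParams.set("color", color);
      result.push(url.toString());
    }
  }
  return result;
}

const urls = variantUrls("https://www.yourstore.com/shirt", sizes, colors);
console.log(urls.length); // 50
console.log(urls[0]); // https://www.yourstore.com/shirt?size=XS&color=red
```

Adding a third attribute, such as material, multiplies the total again, which is why the number of near-duplicate URLs grows so quickly in large catalogs.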

What is Crawl Budget and why should you care?

Crawl budget is the amount of crawling that Google allocates to your site, that is, the number of URLs that Googlebot is willing to crawl in a given period of time. This budget is not unlimited and depends on:

- Domain popularity and authority.

- Server response speed.

- Internal structure.

- Number of errors.

- Volume of new and updated URLs.

If you spend that budget on unnecessary pages, such as duplicate variants, you are slowing down the crawling of the URLs that really matter.

The technical solution: move variants to a non-indexed subdomain

How Google’s crawl budget works

Before continuing, it’s essential to understand what the crawl budget consists of.

Googlebot has a limited amount of time and energy each day to visit and read pages on the Internet. That time is called “crawl budget.” It’s not that each site is assigned a fixed number of pages to review, but Google adapts this budget according to several factors. Here we explain how it works:

Key Components of the crawl budget

- Crawl Rate Limit: This is the maximum rate at which Googlebot can make requests to your server without overloading it. If your server responds quickly and without errors, Googlebot can increase the crawl rate.

- Crawl Demand: Google decides how much “interest” it has in crawling your site based on different factors. That is, if Google finds that certain pages are regularly updated or are very relevant to users, it will crawl them more frequently.

How is Crawl Budget Assigned?

Although examples with numbers are sometimes used to simplify, in practice, Google does not assign an exact number of daily URLs to each site. It’s a dynamic process that changes based on the health and performance of your server, as well as the quality and importance of your content.

If your site has many pages, but many are duplicates or of low value, Google may decide to crawl those pages less and focus on those that offer unique and useful content. On the contrary, if the site is very active and the pages are frequently updated, the “crawl demand” will increase, allowing Googlebot to crawl more content.

The main goal is for Google to be able to index the most relevant and updated content without overloading either the server or the indexing process. This helps users find quality content and helps the site itself function optimally.

So… why use a subdomain?

Because Google treats each subdomain as a separate host with its own crawl budget. That is, the subdomain is assigned a crawl budget independent of the main domain, so crawling the variants does not eat into the main domain’s budget.

Separating product variant URLs on a subdomain allows you to better “organize” content so that Google can pay more attention to the main pages of the site.

Imagine your website is like a large library. Within that library, you have very important sections (like the main pages of your products) and other sections with more technical or detailed information (like product variants that in this case contain duplicate content). By putting the variants on a subdomain, you’re telling Google: “Here’s a separate area that can be explored independently.” This has several advantages:

- Crawl optimization: By separating lower-priority content, Google’s robot (Googlebot) can focus first on the main pages that have greater value for users.

- Better organization: Although a fixed number of URLs to crawl is not assigned, this separation helps Google better understand the structure of your site and, in that way, dedicate crawling time to what’s most important.

- Avoid duplicate content: By separating variants, you reduce the possibility of them mixing with the main content and causing confusion, which can improve the quality of crawling and indexing.

This way, if you move the variants to a subdomain like variants.yourstore.com, you are:

- Separating those URLs from the main crawl.

- Preventing Google from treating them as part of the relevant SEO content.

- Offloading the main domain from duplicate and low-value URLs.

In summary, using a subdomain for your product variant URLs doesn’t mean you magically gain “more” crawl budget, but you help Google better distribute its time to crawl and index the most relevant content on your site.

What if I don’t want Google to index the subdomain?

Perfect. Ideally, these URLs should not be indexed, but they should still be accessible for Google Shopping. That’s why:

Don’t block the subdomain in the robots.txt file

If you put Disallow: /, Googlebot won’t be able to crawl them for Shopping.

Leave them accessible for crawling, but control indexing by other means.
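A quick way to guard against this mistake is to check the subdomain’s robots.txt for a blanket block before deploying. The sketch below is a deliberately simplified check: the function name is hypothetical, and it ignores user-agent grouping and `Allow` overrides, so treat it as a smoke test rather than a full robots.txt parser.

```javascript
// Returns true if the file contains a rule that blocks every path
// ("Disallow: /" or "Disallow: /*").
// Simplification (assumption): user-agent groups and Allow overrides are ignored.
function hasBlanketDisallow(robotsTxt) {
  return robotsTxt
    .split(/\r?\n/)
    .map((line) => line.replace(/#.*$/, "").trim()) // drop comments and padding
    .some((line) => /^disallow\s*:\s*\/\*?$/i.test(line));
}

const openRobots = "User-agent: *\nAllow: /";
const blockedRobots = "User-agent: *\nDisallow: /";

console.log(hasBlanketDisallow(openRobots)); // false
console.log(hasBlanketDisallow(blockedRobots)); // true
```

A rule such as `Disallow: /cart/` is not flagged, because it only blocks one section and leaves the variant pages crawlable.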

Add the noindex, follow tag to each HTML URL: <meta name="robots" content="noindex, follow">

This allows Google to crawl the pages (mandatory for Shopping) but prevents it from displaying them in organic search results.

This way you avoid having product variants appear indexed in searches.
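Because a single malformed tag silently undoes the whole setup, it can help to verify the rendered HTML of a variant page. The following is a minimal sketch with hypothetical function names; it uses a regular expression instead of a real HTML parser and assumes the `name` attribute comes before `content`.

```javascript
// Extract the directives of <meta name="robots"> from an HTML string.
// Regex-based to stay dependency-free; assumes name precedes content.
function robotsDirectives(html) {
  const match = html.match(
    /<meta\s+name=["']robots["']\s+content=["']([^"']*)["']\s*\/?>/i
  );
  if (!match) return [];
  return match[1]
    .split(",")
    .map((directive) => directive.trim().toLowerCase())
    .filter(Boolean);
}

// True when the page asks not to be indexed but still lets links be followed.
function isNoindexFollow(html) {
  const directives = robotsDirectives(html);
  return directives.includes("noindex") && !directives.includes("nofollow");
}

const page = '<head><meta name="robots" content="noindex, follow"></head>';
console.log(isNoindexFollow(page)); // true
```

Typographic quotes, which often creep in when a tag is copied from a formatted page, are not valid attribute delimiters, so the sketch treats such a tag as missing.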

Invisible links: how to drop <a href> so you don’t pass link juice

From the main product page, you should not link variants with <a href> if you don’t want Google to crawl them or transfer authority.

Instead, use something like this:

<span data-href="https://variants.yourstore.com/red-shirt" class="fake-link">See red variant</span>

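This is not a crawlable link: according to Google’s own guidance, Googlebot generally only follows <a> elements that have an href attribute. So, in principle, Google doesn’t follow it, doesn’t crawl the target through it, and doesn’t pass authority to it.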
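If these elements are generated from product data rather than written by hand, the markup can be produced by a small template helper. This is only a sketch: `escapeHtml` and `renderVariantLink` are hypothetical names, and the escaping covers just the characters that matter inside attribute values and text.

```javascript
// Escape characters that are unsafe inside HTML attribute values or text nodes.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render the non-anchor element used in place of <a href>.
function renderVariantLink(url, label) {
  return `<span data-href="${escapeHtml(url)}" class="fake-link">${escapeHtml(label)}</span>`;
}

console.log(
  renderVariantLink("https://variants.yourstore.com/red-shirt", "See red variant")
);
// <span data-href="https://variants.yourstore.com/red-shirt" class="fake-link">See red variant</span>
```

Escaping matters mostly for variant URLs that carry query strings, where a raw `&` or a quote in a label would otherwise break the attribute.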

What if I want the user to be able to click?

You just need a small JavaScript function:

    // Make every .fake-link element navigate to the URL stored in its data-href.
    document.querySelectorAll('.fake-link').forEach(el => {
      el.addEventListener('click', () => {
        window.location.href = el.getAttribute('data-href');
      });
    });

This way you have the best of both worlds:

- Google doesn’t crawl.

- The user navigates without problems.

Important! Remove variant URLs from sitemap and Search Console

Even if they are on a subdomain and carry noindex, including them in the sitemap or submitting them through Search Console tells Google that you want them crawled and indexed. That contradicts the noindex signal and invites unnecessary crawling.

Therefore you should:

- Remove all variant URLs from the XML sitemap.

- Verify the subdomain in Search Console only if you need it for Merchant Center (that is, for Shopping). Even then, do not submit sitemaps or request indexing, to avoid unnecessary crawling.
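If the sitemap is generated automatically, the exclusion can be enforced in code instead of by hand. A minimal sketch, assuming the generator works from a flat list of absolute URLs and that variants live on the `variants.yourstore.com` host used as the example in this article; the function name is hypothetical.

```javascript
// Host of the variants subdomain (the example name used in this article).
const VARIANTS_HOST = "variants.yourstore.com";

// Keep only the URLs that do not live on the variants subdomain.
function sitemapUrls(allUrls, variantsHost = VARIANTS_HOST) {
  return allUrls.filter((url) => new URL(url).hostname !== variantsHost);
}

const candidates = [
  "https://www.yourstore.com/shirt",
  "https://variants.yourstore.com/red-shirt",
  "https://www.yourstore.com/jacket",
];

// Logs only the two www.yourstore.com URLs.
console.log(sitemapUrls(candidates));
```

Comparing parsed hostnames rather than searching for a substring avoids accidentally dropping main-domain URLs that merely contain the word "variants" in their path.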

Can I use this subdomain in Google Shopping? Yes!

Here comes the interesting part. You can use a subdomain with noindex in Google Shopping, as long as:

- It’s not blocked by robots.txt.

- The page is accessible, loads well, and has a buy button.

- It’s verified in Search Console and Google Merchant Center, but as we mentioned, without uploading sitemaps or requesting indexing.

- The product feed points to those URLs.

We repeat: don’t use Disallow: / in robots.txt, but you can use noindex.

This allows Google to crawl the URL to validate content, price, stock, etc., but not to display it in organic search results.
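As an illustration of a feed that points to those URLs, the sketch below builds one feed entry per variant. The attribute names (`id`, `item_group_id`, `title`, `link`, `price`, `availability`, `color`, `size`) come from Google’s product data specification; the shape of the input objects, the `slug` field, and the function name are assumptions, and other required attributes such as `image_link` are omitted for brevity.

```javascript
// Build a Merchant Center feed entry for one variant.
// The product/variant input shape is hypothetical; adapt it to your catalog.
function toFeedItem(product, variant) {
  return {
    id: `${product.sku}-${variant.color}-${variant.size}`,
    item_group_id: product.sku, // groups all variants of the same product
    title: `${product.title} - ${variant.color} - ${variant.size}`,
    link: `https://variants.yourstore.com/${variant.slug}`, // landing page on the subdomain
    price: `${variant.price.toFixed(2)} ${product.currency}`,
    availability: variant.inStock ? "in_stock" : "out_of_stock",
    color: variant.color,
    size: variant.size,
  };
}

const shirt = { sku: "SHIRT01", title: "Cotton shirt", currency: "EUR" };
const redXl = { color: "red", size: "XL", slug: "red-shirt", price: 19.9, inStock: true };

const item = toFeedItem(shirt, redXl);
console.log(item.link); // https://variants.yourstore.com/red-shirt
console.log(item.price); // 19.90 EUR
```

The price and availability in the feed need to match what the landing page shows, since that is what Google validates when it crawls the URL.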

Why not use canonicals or parameters?

Many people try to solve this with rel=canonical or by controlling parameters in Search Console (Google retired its URL Parameters tool in 2022). Neither approach prevents Google from crawling those pages or from consuming your crawl budget.

With the subdomain + noindex + no <a href> technique, you get:

- Absolute control.

- Zero wasted authority.

- Lower risk of duplication or cannibalization.

- URLs visible only where you want them (Shopping, Ads, specific campaigns).

Benefits of this SEO strategy

- Real optimization of Crawl Budget.

- Preservation of link juice on the main product page.

- Reduction of duplicate or cannibalized content.

- Cleaner architecture that’s easier for Google to understand.

- Compatibility with Google Shopping, subject to the domain-mismatch risk described at the beginning.

When not to apply this technique?

You shouldn’t move or block variants if:

- They have their own search intent.

- They receive external links.

- They are pages with unique content (photos, descriptions, specific reviews).

Do keyword research: if “red XL jacket” has significant search volume, it may be worth ranking it as an independent page, keeping its URL on the main domain rather than moving it to the subdomain.

Here you will have no choice but to analyze case by case and see what suits your project best.
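For large catalogs, the three criteria above can at least be used to pre-sort variants before the manual review. The sketch below is a rough filter, not a decision rule: the field names are hypothetical and the search-volume threshold is a placeholder you would need to set for your own market.

```javascript
// Returns true when a variant looks worth keeping as an indexable page on the main domain.
// minMonthlySearches is a placeholder threshold (assumption), not a recommended value.
function keepOnMainDomain(variant, { minMonthlySearches = 100 } = {}) {
  return (
    variant.monthlySearches >= minMonthlySearches || // has its own search intent
    variant.externalLinks > 0 || // receives external links
    variant.hasUniqueContent === true // unique photos, descriptions, or reviews
  );
}

const redXlJacket = { monthlySearches: 900, externalLinks: 0, hasUniqueContent: false };
const beigeXsJacket = { monthlySearches: 0, externalLinks: 0, hasUniqueContent: false };

console.log(keepOnMainDomain(redXlJacket)); // true
console.log(keepOnMainDomain(beigeXsJacket)); // false
```

Variants that come back `true` are candidates for their own indexable URL; everything else can be reviewed for a move to the subdomain.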

In Conclusion:

Moving product variants to a non-indexed subdomain, without traditional HTML links, and using them exclusively for Google Shopping, is an advanced technical SEO strategy that offers enormous advantages if you manage large catalogs.

At a minimum, it allows you to:

- Control how Google crawls your site.

- Avoid wasting authority.

- Improve crawling speed.

- Centralize positioning on key URLs.

All while you continue using those variants for Shopping and Performance Max campaigns.

This way you can exploit the best of both worlds (Blue Links and Shopping) without giving up anything.

We have tried to document everything, including the main risk with subdomains mentioned at the beginning. The technique is not risk-free, but the gains can outweigh this drawback.

We hope that this technique can be useful to you and that you decide to implement it.

If you need help with implementation or want to make an inquiry, don’t hesitate to get in touch.


Alvaro Peña de Luna

Co-CEO and Head of SEO at iSocialWeb, an agency specializing in SEO, SEM and CRO that manages more than 350M organic visits per year with a 100% decentralized infrastructure.

In addition, there is the company Virality Media, which runs its own projects with more than 150 million active monthly visits spread across different sectors and industries.

Systems Engineer by training and SEO by vocation. Tireless learner, fan of AI and dreamer of prompts.