Artificial Intelligence (AI) is revolutionizing the way we create content, and video is no exception.

Thanks to AI, marketers and communications professionals can deliver much faster and more engaging content to their respective audiences at a fraction of the cost.

The same is happening with video.

It’s still a bit tricky, but… Who remembers the days when you needed a camera and a TV set to record your own show?

That is a thing of the past.

The same is certainly happening with virtual studios and video editing programs thanks to AI.

Very soon, recorded footage will almost certainly not even be needed to produce high-quality videos in a fraction of the time.

In fact, we can already count on AI tools that allow us to convert text into images, generate audio automatically and edit text more efficiently.

But what if you had a tool that could do it all automatically?

Well, it’s already possible thanks to this little script that will allow you to generate AI videos on autopilot.

Ingredients: What you need for the creation of a video with artificial intelligence

But how are we going to create this video automatically?

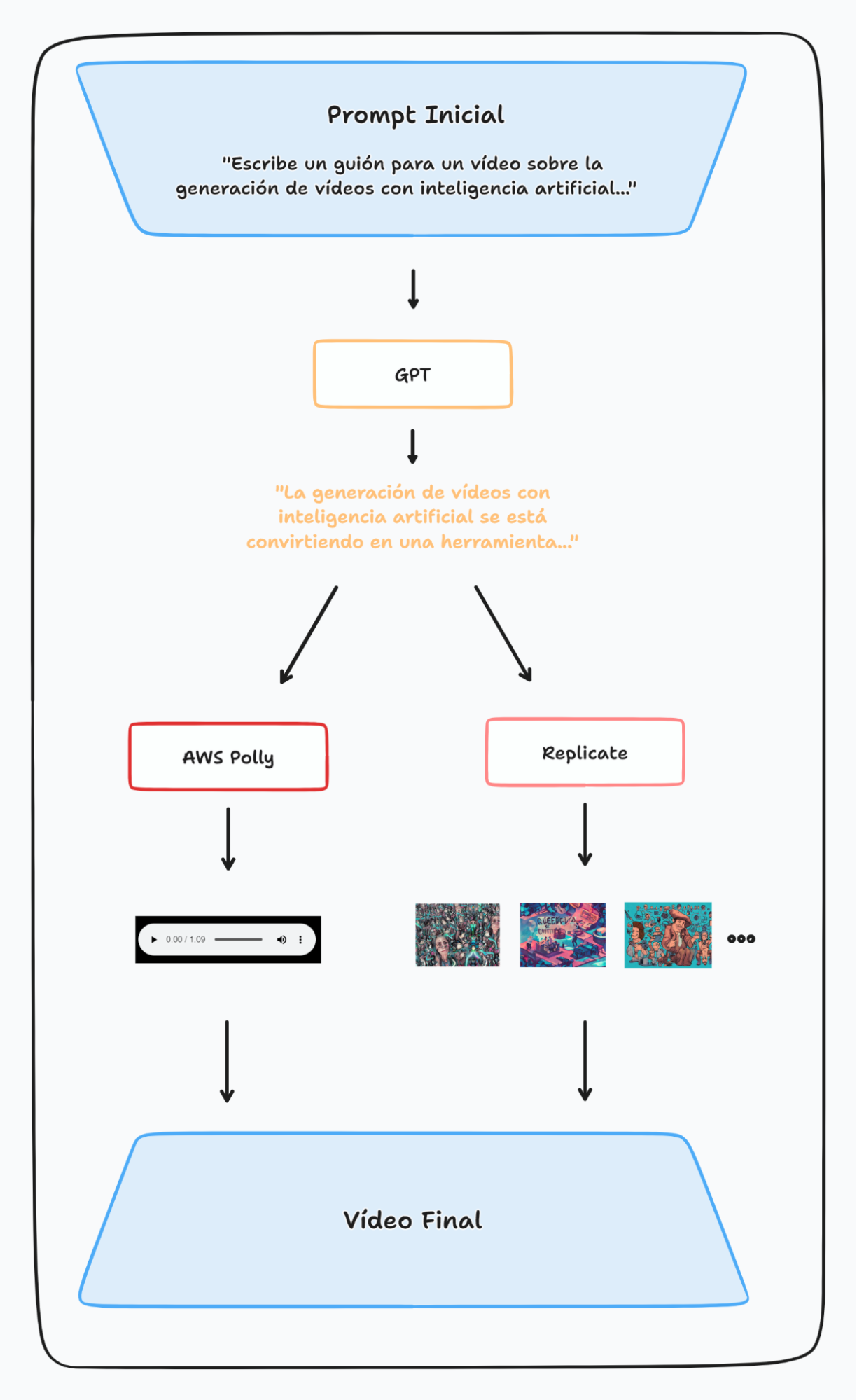

Well, it is necessary to chain three different APIs:

- When creating a video, the first thing we need is the content. To do this, we generate the text with the OpenAI API and the GPT-3 model, although we can also opt for ChatGPT or a more advanced model. There are also alternative models you can use, such as Bloom.

- Then we convert that text into speech. To do this, we use Amazon Web Services (AWS), one of the most widely used cloud platforms, and its text-to-speech service, Polly. The main advantages of Polly over other tools are that it is inexpensive and very easy to use.

- Finally, we transform text into images. We will use static images rather than generating video, although this field is advancing quickly. For this, we will use the Replicate API and the Stable Diffusion model.

In summary, each function maps to an API and a specific model: text generation (OpenAI, GPT-3), text-to-speech (AWS, Polly) and text-to-image (Replicate, Stable Diffusion).

How to make videos with artificial intelligence

The input for our video will be a prompt in which we will specify the theme of our video.

We will tell GPT-3: “write a script for a video about video generation with Artificial Intelligence”.

The GPT-3 model will return a script, and we will tell Polly: “take this text and generate a voice”.

This will give us an audio file. Next, we make a request to Replicate: “Split the text at the periods and, for each sentence, generate an appropriate image for that text”.

This will give us a list of images. We will then put the audio and images together into a final video.
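The flow just described can be sketched as a small orchestration function. This is only an illustrative outline, not the script from the article: the function names (`build_video`, `split_sentences`) and the idea of passing each API step in as a callable are assumptions made to keep the example self-contained.

```python
from typing import Callable, List


def split_sentences(script: str) -> List[str]:
    """Split the script at periods and drop empty fragments."""
    return [part.strip() for part in script.split(".") if part.strip()]


def build_video(
    prompt: str,
    generate_text: Callable[[str], str],
    text_to_speech: Callable[[str], str],
    text_to_image: Callable[[str, int], str],
    assemble: Callable[[List[str], str], str],
) -> str:
    """Chain the three API steps and return the path of the final video."""
    script = generate_text(prompt)           # text generation, e.g. OpenAI
    audio_path = text_to_speech(script)      # voice, e.g. Polly
    image_paths = [
        text_to_image(sentence, index)       # one image per sentence, e.g. Replicate
        for index, sentence in enumerate(split_sentences(script))
    ]
    return assemble(image_paths, audio_path)  # join images and audio into a video
```

Keeping each step behind a plain function makes it easy to swap one provider for another, for example a different image model, without touching the rest of the chain.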

What the video generation script does:

Now we are going to explain which tasks are carried out by the script that our Head of SEO, Alvaro Peña de Luna, has programmed, based on the script created by @DavidGarciaSEO

It's true that somewhat "weird" images sometimes appear, but it's a matter of the technology advancing or of trying an API other than Dall-e.

— David García (@DavidGarciaSEO) February 10, 2023

You can find the script here (it doesn't work in Colab): https://t.co/MSsTmYhB1M

I recommend watching this video first: https://t.co/S9fXlCXMw8

The Colab script performs a number of specific tasks, described below:

- Collects a prompt: the script takes a prompt, a short text suggestion that states what content to generate.

- Generates text: from the prompt, it automatically generates text using a pre-trained language model.

- Gives it a voice: it then uses a speech synthesis service to convert the generated text into a synthetic voice.

- Creates an image for each sentence: for each generated sentence, it creates an image that illustrates it. These images can be graphics, illustrations, or any other image relevant to the generated content.

- Concatenates the images: next, it joins the images generated for each sentence, in order, into a single sequence that covers all the generated content.

- Creates a video: finally, it uses a video editing library to create a video in which the image generated for each sentence is shown together with the synthetic voice reading it.
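The text and voice tasks above could look roughly like the sketch below. It is an assumption-laden illustration rather than the original script: it uses the chat completions interface of the `openai` Python library (version 1.0 or later) instead of the GPT-3 completion call, the model name `gpt-3.5-turbo` and the Polly voice `Joanna` are example choices, and the function names are hypothetical. The clients are passed in as arguments so the functions themselves do not depend on any credentials.

```python
def generate_script(client, prompt, model="gpt-3.5-turbo",
                    temperature=0.7, max_tokens=500):
    """Ask a chat model for the video script and return only the text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,   # lower values give more deterministic output
        max_tokens=max_tokens,     # upper bound on the length of the answer
    )
    return response.choices[0].message.content.strip()


def synthesize_voice(polly, text, path="voice.mp3", voice_id="Joanna"):
    """Convert text to speech with Polly and save the MP3 to `path`."""
    response = polly.synthesize_speech(
        Text=text, OutputFormat="mp3", VoiceId=voice_id
    )
    # Polly returns the audio as a stream; read it and write it to disk.
    with open(path, "wb") as audio_file:
        audio_file.write(response["AudioStream"].read())
    return path
```

With real credentials, `client` would typically be an `openai.OpenAI(api_key=...)` instance and `polly` a `boto3.client("polly", ...)` created with your AWS access keys and a region.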

Steps prior to running the AI video generation script

The first thing to do is to open the Python code in your browser using Google Colab.

Now, we must have access to the three APIs we are going to need. If you follow the AI playlist on our YouTube channel or our blog, you will already have created the first of these keys.

- OpenAI API

- AWS access keys for Polly (access key ID and secret access key)

- Replicate API

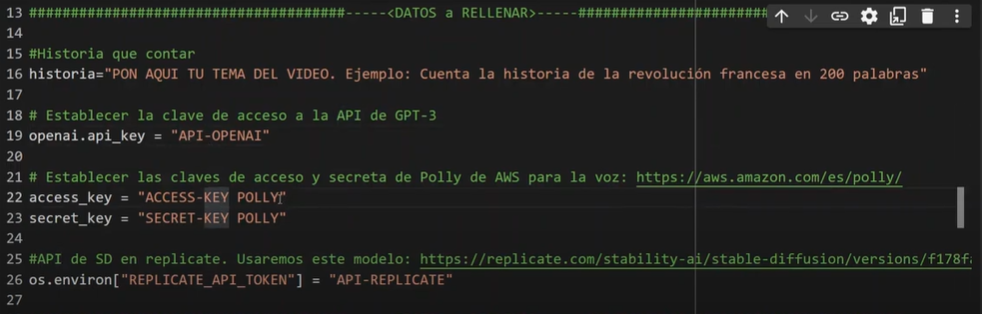

Once we have all of them, we'll need to enter them in the following lines of code:
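As a rough idea of what such a block of settings might look like, here is a minimal sketch. The variable names and placeholder values are hypothetical and are not taken from the original script; the small helper simply reports which values have not been filled in yet.

```python
# Placeholder values: replace each one with your own key before running.
# These variable names are hypothetical, not those of the original script.
OPENAI_API_KEY = "YOUR_OPENAI_API_KEY"
AWS_ACCESS_KEY_ID = "YOUR_AWS_ACCESS_KEY_ID"
AWS_SECRET_ACCESS_KEY = "YOUR_AWS_SECRET_ACCESS_KEY"
REPLICATE_API_TOKEN = "YOUR_REPLICATE_API_TOKEN"


def missing_credentials(credentials):
    """Return the names of credentials that are empty or still placeholders."""
    return [
        name
        for name, value in credentials.items()
        if not value or value.startswith("YOUR_")
    ]


credentials = {
    "OPENAI_API_KEY": OPENAI_API_KEY,
    "AWS_ACCESS_KEY_ID": AWS_ACCESS_KEY_ID,
    "AWS_SECRET_ACCESS_KEY": AWS_SECRET_ACCESS_KEY,
    "REPLICATE_API_TOKEN": REPLICATE_API_TOKEN,
}

# Stop early with a clear message instead of failing halfway through the run.
unfilled = missing_credentials(credentials)
if unfilled:
    print("Please fill in:", ", ".join(unfilled))
```

Checking the keys up front avoids spending API credit on the text and audio steps only to fail later at the image step.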

Let's go to our Colab and run the script

To create a video fully automatically with the help of our super assistant, better known as AI, we will follow these steps:

- We create a client for the OpenAI API, using standard Python libraries for API use.

- We write the initial prompt on line 16 of the Python script.

- We will provide the necessary access keys for the API input and output and choose the parameters to use. Remember: you can play with the model, temperature, maximum number of tokens to use…

- With the generated text, an audio file will be created by the Polly client we set up and saved in MP3 format.

- We will calculate the duration and separate the text into sentences to create an image for each of them. With this data, the duration of each image will be determined.

- Using the Stable Diffusion model on Replicate, an image will be generated for each text fragment, adjusting the parameters as necessary.

- We check that each request succeeded and save each image in the Colab content folder.

- We concatenate the images with the set duration and add the corresponding audio.

- The result will be saved in MP4 format and can be downloaded.
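The last steps in the list above can be sketched as follows. This is an illustration under stated assumptions, not the article's code: it assumes every image is shown for the same length of time (audio duration divided by the number of sentences), that `fetch` is a function such as `requests.get`, and that MoviePy 1.x is installed, where `ImageClip`, `AudioFileClip` and `concatenate_videoclips` are imported from `moviepy.editor`. All function names are hypothetical.

```python
def seconds_per_image(audio_seconds, sentence_count):
    """Share the audio duration equally among the images."""
    if sentence_count <= 0:
        raise ValueError("the script must contain at least one sentence")
    return audio_seconds / sentence_count


def save_image(url, path, fetch):
    """Download one generated image; return True only on HTTP 200."""
    response = fetch(url)
    if response.status_code != 200:
        return False
    with open(path, "wb") as image_file:
        image_file.write(response.content)
    return True


def assemble_video(image_paths, audio_path, output_path="video.mp4", fps=24):
    """Concatenate the images, add the audio and write an H.264 MP4 file."""
    # Imported lazily; assumes MoviePy 1.x (names live in moviepy.editor).
    from moviepy.editor import AudioFileClip, ImageClip, concatenate_videoclips

    audio = AudioFileClip(audio_path)
    duration = seconds_per_image(audio.duration, len(image_paths))
    clips = [ImageClip(path).set_duration(duration) for path in image_paths]
    video = concatenate_videoclips(clips, method="compose").set_audio(audio)
    video.write_videofile(output_path, fps=fps, codec="libx264")
    return output_path
```

Splitting the time equally is the simplest option; weighting each image by the length of its sentence would keep images and narration more closely in sync.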

In the video below, you can watch each step in detail and you can also see which lines of code you can modify to adjust different parameters according to your needs.

This content is generated from the audio voiceover so it may contain errors.

(00:00) Hello and welcome to a new iSocialWeb video. I'm Luis Fernández, and we continue with our series of videos on artificial intelligence and automation by explaining something really cool. In this video we are going to build a script that, from a prompt in which we specify a topic, generates a complete video with images, content and a voiceover, just by clicking a button. This is a script written by Álvaro Peña and based on a script by David García.

(00:29) You have their Twitter handles in the video and in its description so you can follow them, since they publish very cool things about artificial intelligence. If the subject interests you, don't miss them. Also remember that we have a series of videos on artificial intelligence, a playlist here on the iSocialWeb channel. I recommend taking a look at it, because this video is a bit more complex; there are more moving parts in this video than in the previous ones. So if you want a step-by-step explanation of how to run

(00:56) a Colab, what Python is, and so on, you have the previous videos. Those are fairly basic tasks too, but really cool, and they give you a very good foundation for this kind of work, so I recommend watching them. Let's get into the details. Basically, we are going to generate a video fully automatically. How? We are going to chain three different APIs. I have prepared a diagram, since this video is a bit more complex. Here we have one column showing the function we want to perform,

(01:27) another with the API to use and another with the specific model. So what do we need for a video? First we need the content the video is going to talk about, so we need a text generation program. We will do this with the OpenAI API, and the model will be GPT-3 in this case. You can always use ChatGPT or a more advanced model if you are watching this later on, or even alternative models like Bloom. The point is that we need to generate text. Next, we have to turn this text into speech. For this we will use

(01:55) AWS, that is, Amazon Web Services, which is another of the most common services on the internet, so it will be good for us to know how to use it. We will use Polly, which is its text-to-speech library. There are others, and we are seeing a lot of progress here, such as Whisper by OpenAI, although that one does not do text to speech but speech to text; we expect big improvements in this area. In this case we will use Polly because it is quite easy to use and inexpensive. And finally

(02:24) we are going to turn this text into images. We need to generate some images; in this case we will use static images and will not generate video, but we are seeing a lot of progress here, so stay tuned, because we may well make a change in the future. For this we will use the Replicate API and the Stable Diffusion model. As you can see, there are three APIs that we have to fit together. In our case, the input for our program will be an initial prompt in which we state the topic of our video. It will be, for example,

(02:52) "write a script for a video on the generation of videos with artificial intelligence". It's a bit meta, making a video about generating videos, but it can turn out very cool. This prompt is the only thing we have to write. We pass it to GPT-3 and it gives us a result, a script, which in this case would be "The generation of videos with artificial intelligence is becoming a widely used tool", etcetera. We take the text and, first, pass it to AWS Polly and say: "Hey, take this text and generate

(03:23) a voice". This gives us an audio file. Here is the example; I won't press play because I want to show you the complete result, but we would have the audio and save it. Then, with the same text, we go to Stable Diffusion through Replicate and say: "Okay, for each sentence", so we split the text at the periods, "generate an appropriate image for this text". This gives us a list of images, and we take our audio and our images and join them into a

(03:52) final video, and this would be the result. Here is an example; I'll press play for a moment so you can hear how it sounds: "The generation of videos with artificial intelligence is becoming an increasingly popular content creation tool. This is because AI helps content creators make videos faster and with greater precision. AI can help content creators edit, animate and compose videos with less effort." It is not super optimized; you can add a lot of variety here, many kinds

(04:25) of things. When it comes to generating images there is quite an interesting job to do; as you will know if you have been following this a bit, it is almost an art in itself. Let me show you Replicate. Here is its main website, and here is the Stable Diffusion model. Inside Replicate you have a playground. This will be linked in the Python script, and in it you can enter the prompt. Here, for example, I entered "AI generating videos for YouTube", that is, an AI making videos for YouTube. This is the result it gave me. But

(04:56) as you can see, the prompt can be much longer. As you know, prompts, especially in Stable Diffusion, benefit a lot from adding many terms such as "HD" and similar, and they also benefit a lot from negative prompts. You can play with a lot of values here. Then you click submit. You can run tests here before executing the script, to see the result and check whether the images fit your videos or not. We wait a little while it loads, which is quite fast. Here it is, and here we have the image.

(05:35) It is not very good, to be honest; the prompt was improvised quickly, and the ones in our video are somewhat better. I think it's amazing, especially for this type of explainer video, to have abstract, related images like this; it's very cool. And within Replicate, and I'll take the opportunity to digress a bit, you have a lot of models. Once you learn to use this API you can generate your own. Here you have, for example, Stable Diffusion, but you also have BLIP-2, which lets you answer questions about

(06:02) images, and you have Whisper from OpenAI too; if you don't like the OpenAI API, you can access Whisper here. There are lots of models, and the truth is that it's a startup that is working very well, so I would keep an eye on it. We now have the final video, so let's move on to the code and do a quick review of how to run everything. As always, it runs cell by cell. In this case we have only two cells. The first installs all the dependencies: you can see that we use the OpenAI Python library, and this library, which lets us

(06:34) generate the video, is a very widely used one, ffmpeg. We also have boto3, which connects us to Amazon Web Services, Pillow to manipulate images, and the Replicate library. Here we import all the necessary libraries together with some that are already included in Python. In this section, the one labeled as data to fill in, you have everything you need to touch; these are the only lines of code you will have to modify. Here you enter the initial prompt, followed by your OpenAI API key and your access

(07:12) keys. For all of these, here you have the links to generate the keys you need; simply generate them and use them. Remember that all of this has limits on free accounts, so you will not be able to generate unlimited videos for free; you will have to add a credit card. Once you have filled in all this data, the rest is code: you press play and it runs and produces a final result. If we go here, it downloads a file called video.mp4, and you get a result like the one I

(07:43) have here. You can automate this by putting it inside a loop and iterating over the initial prompts to generate dozens of videos if you want. Take this as a base: it is the most basic thing you can do, and from here you can keep improving the code and achieve very cool things. To finish, I will briefly explain what each part of the code does, just an overview, for those who are curious or want to modify it, so you know where to make changes.

(08:12) Once the data is filled in, we begin with the call to the GPT-3 API. As I said before, you can update this to ChatGPT; you will have to change the format a bit, including in the JSON the role for GPT, plus the previous text, plus the response you want it to generate, and you can even keep chaining messages. I won't go on about it now, but know that there is a lot to get out of ChatGPT; it is cheaper and you can optimize it quite a bit. Here you can also always play with the temperature, which, remember, is like the

(08:47) determinism of the model; you can lower or raise it to your taste. You can also play with the number of tokens. Next we have the result, which will be the response; we take the correct format and keep only the text of the response. Next we create a client for the Polly API. It is very similar; if you look at how APIs are used, especially here in Python where it is standardized in libraries, it is all very similar. You choose what you are going to use, in this case Polly, and you have some

(09:20) access keys. For OpenAI we already included them earlier; let me see where, here. But basically you need the API's access keys and an input for the API. In this case, for example, this is the client we create, and we pass it our text, the generated text. Whatever the language and whatever the API, there will always be some inputs, some outputs and a type of authentication. Then, with this call to the client we created, the

(09:57) API generates the audio. Here we save the audio: we format it a bit so we can use it in Python, open it and save it to a file, voice.mp3. Next we calculate the duration, and then we split the text we had from before at the periods; as I told you, we make one image per sentence. With these two pieces of data, the duration and the fragments, we calculate the duration per image. We store this value so that later, when creating the video, we can say: "Hey, I want each image to last

(10:30) this long". Here we make the last remaining call: we call Stability's Stable Diffusion inside Replicate, using a specific version of the model, and for each of the text fragments we generated we pass it that fragment along with a series of parameters. As I said before, the best thing is to go here and play around beforehand and try the settings you want. During this big call we show the image so that we can see each one as it is generated.

(11:06) We make a series of requests and checks to see that everything is correct, and if they return a 200 we save the image. We save it with the name "image" plus a counter that increases by one on each iteration, so one number for the first text, the next for the second text, and so on, and we store it in our content folder. We verify that everything has been created correctly and finally we create the video. To do this, if you look here,

(11:39) we have a variable called video in which we call concatenate_videoclips: we concatenate the different images with the duration we set, and at the end we add the audio, video first and then audio. We save all this in MP4 format with the H.264 codec, set the fps, give it a name and save it, and finally we can download it. And that would be everything. It is a bit dense but, as always, Python is a fairly understandable language; if you know a bit of English and read it little by little, you can infer

(12:16) what each thing does. And that is everything. Here you have the example of the video. I will leave everything written up in a post on the iSocialWeb website in case you want to review it calmly if the video went too fast. And you know, if you have any questions you can leave them in the comments and we are here to help. Best regards, and thank you very much for your time.

Open the Google Colab notebook and create your own videos

This is the script we used in the previous video: Go here to Google Colab

Give it a try and tell us what you think.

Or even better, share your work with us so we can see the results for ourselves.

Here is the result obtained

The resulting video is not very good because the prompt used in this case, “AI generating videos for youtube”, is very poor.

Keep in mind that the prompts used in the colab script are much more elaborate and will give you better results than those seen in the video tutorial we have recorded.

The important thing, in short, is to get the idea of how it is possible to generate a video automatically.

Also, if you know Python, you can modify our script and improve the prompt to get a much more polished video for your purposes.

Create professional and quality videos with the help of artificial intelligence!

Alvaro Peña de Luna

Co-CEO and Head of SEO at iSocialWeb, an agency specializing in SEO, SEM and CRO that manages more than 350M organic visits per year with a 100% decentralized infrastructure.

In addition to the company Virality Media, a company with its own projects with more than 150 million active monthly visits spread across different sectors and industries.

Systems Engineer by training and SEO by vocation. Tireless learner, fan of AI and dreamer of prompts.